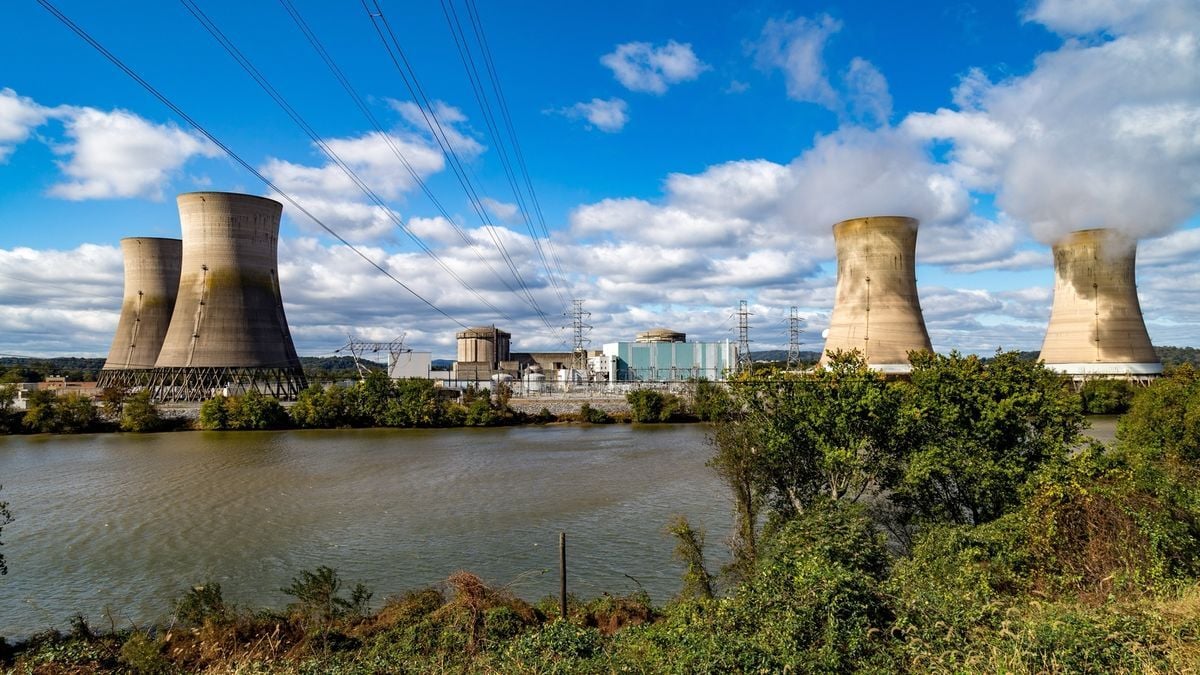

Modern AI data centers consume enormous amounts of power, and they look set to become even more power-hungry in the coming years as companies like Google, Microsoft, Meta, and OpenAI strive toward artificial general intelligence (AGI). Oracle has already outlined plans to use nuclear power plants for its 1-gigawatt data centers, and Microsoft appears to be following suit: it just inked a deal to restart a nuclear power plant to feed its data centers, Bloomberg reports.

I think when you look at how expensive other forms of green energy (like wind) are over the long term, nuclear looks really good. In the short term, yeah, it's expensive, but we need long-term solutions.

I don't think that math works out, even over the entire 70+ year life cycle of a nuclear reactor. When it costs $35 billion to build two reactors of roughly 1 GW each, then even if they last 70 years, the construction cost amortized over every year, or over every megawatt-hour generated, is still really expensive, especially once you account for interest.
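
To put rough numbers on the amortization argument, here is a minimal Python sketch using the standard capital recovery (annuity) factor. The $35 billion cost and 70-year life come from the comment; the 2,000 MW combined capacity, the 90% capacity factor, and the 5% interest rate are illustrative assumptions, and the sketch counts construction capital only (no fuel, operations, or decommissioning).

```python
HOURS_PER_YEAR = 8760


def capital_recovery_factor(rate: float, years: int) -> float:
    """Fraction of the up-front cost that must be paid each year to
    amortize it at `rate` over `years` (standard annuity formula)."""
    if rate == 0:
        return 1.0 / years  # no interest: straight-line amortization
    growth = (1.0 + rate) ** years
    return rate * growth / (growth - 1.0)


def capital_cost_per_mwh(capex_usd, capacity_mw, capacity_factor, rate, years):
    """Levelized construction cost in USD per MWh generated (capital only)."""
    annual_payment = capex_usd * capital_recovery_factor(rate, years)
    annual_mwh = capacity_mw * capacity_factor * HOURS_PER_YEAR
    return annual_payment / annual_mwh


# Assumed inputs: $35B, 2 x 1,000 MW, 90% capacity factor, 70-year life.
no_interest = capital_cost_per_mwh(35e9, 2000, 0.9, 0.0, 70)
with_interest = capital_cost_per_mwh(35e9, 2000, 0.9, 0.05, 70)
print(f"0% interest: ${no_interest:.0f}/MWh")
print(f"5% interest: ${with_interest:.0f}/MWh")
```

Under these assumptions the construction cost alone works out to roughly $32/MWh with no interest but about $115/MWh at 5%, which is the commenter's point about interest: over a 70-year payback, financing more than triples the capital cost per unit of energy.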

And it bakes in that huge cost irreversibly up front, so any future improvements will only make the existing plant less competitive. Wind and solar and geothermal and maybe even fusion will get cheaper over time, but a nuclear plant with most of its costs up front can’t. 70 years is a long time to commit to something.

Can you explain how wind and solar get cheaper over time? Especially wind: those blades have to be replaced fairly often, and they are expensive.

With nuclear, you’re talking about spending money today in year zero to get a nuclear plant built between years 5-10, and operation from years 11-85.

With solar or wind, you’re talking about spending money today to get generation online in year 1, and then another totally separate decision in year 25, then another in year 50, and then another in year 75.

So the comparison isn’t just 2025 nuclear technology versus 2025 solar technology. It’s also 2025 nuclear versus 2075 solar tech. When comparing that entire 75-year lifespan, you’re competing with technology that hasn’t been invented yet.
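
The staged-replacement point can be sketched numerically. The Python below prices each rebuild of a wind or solar installation in the year it happens (years 0, 25, 50, and 75, approximating the decision points above), assuming purely for illustration that the cost of an equivalent installation falls at a constant annual rate. The 5% rate and the 100-unit starting cost are hypothetical, and the model ignores inflation, discounting, and operating costs.

```python
def rebuild_costs(initial_cost, annual_decline, interval_years=25, horizon_years=75):
    """Return (year, cost) for each rebuild, assuming (hypothetically) that
    the price of an equivalent installation drops by `annual_decline`
    every year."""
    return [
        (year, initial_cost * (1.0 - annual_decline) ** year)
        for year in range(0, horizon_years + 1, interval_years)
    ]


# Hypothetical: an installation costing 100 (arbitrary units) today,
# with prices falling 5% per year.
for year, cost in rebuild_costs(100.0, 0.05):
    print(f"year {year:2d}: {cost:6.1f}")
```

Each later purchase is made at that year's prices, whereas a plant whose costs are all paid up front locks in year-zero prices for its whole life.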

Let's take Comanche Peak, a nuclear plant in Texas that went online in 1990. At that time, solar panels cost about $10 per watt in 2022 dollars. By 2022, the price was down to $0.26 per watt. But Comanche Peak is going to keep operating, and trying to compete with the latest and greatest, for the entire 70+ year lifespan of the plant. If 1990 nuclear plants aren't competitive with 2024 solar panels, why do we believe that 2030 nuclear plants will be competitive with 2060 solar panels or wind turbines?
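
The two panel prices quoted above imply an average compound rate of decline, which a short Python calculation makes concrete. This is a backward-looking average over 1990 to 2022 only; it is not a forecast, and the function name is illustrative.

```python
def implied_annual_decline(start_price, end_price, years):
    """Constant yearly fractional drop that takes start_price to end_price."""
    return 1.0 - (end_price / start_price) ** (1.0 / years)


# Figures quoted above: about $10/W in 1990 down to $0.26/W in 2022 (2022 dollars).
rate = implied_annual_decline(10.0, 0.26, 2022 - 1990)
print(f"implied average decline: {rate:.1%} per year")
```

With those figures the prices fell by roughly 10.8% per year on average, compounded over 32 years.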

They have to be competitive with solar panels and grid-scale energy storage costs combined. You can't leave off 90% of the cost and call it a win. That is, unless you are fine pairing solar panels with natural gas, as we currently do, but that defeats much of the purpose of going carbon-free.

They aren’t competitive with 2024 solar panels paired with natural gas. But, again, is that really the world you are advocating for?

Yes, I am, especially since you seem to be intentionally ignoring wind+solar. A solar+wind+natural gas system is much cheaper, and that particular system can handle all the peaking and base-load needs today more cheaply than nuclear can. So nuclear is more expensive today than that kind of combined generation.

In 10 years, when a new nuclear plant designed today might come online, we'll probably have enough grid-scale storage and demand-shifting technology to easily make it through the typical 24-hour cycle, including 10-14 hours of night in most places, depending on the time of year. Based on the progress we've seen between 2019 and 2024, and the projects being designed and built today, we can expect grid-scale storage to plummet in price and dramatically increase in capacity, both in real-time power capacity (measured in watts) and in total energy storage capacity (measured in watt-hours).
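
The difference between power capacity (watts) and energy capacity (watt-hours) can be illustrated with a toy hourly model in Python. Everything here is hypothetical: a flat 1,000 MW load, solar producing 2,700 MW for 10 daylight hours and nothing for the other 14, and an assumed 85% round-trip storage efficiency. The sketch looks at a single day and ignores the order of charging and discharging, multi-day bad weather, wind, and demand shifting.

```python
def storage_requirements(load_mw, solar_mw, round_trip_eff=0.85):
    """Size storage for one day of hourly data (toy model).

    Returns (power_mw, energy_mwh, feasible): the largest hourly shortfall,
    the total shortfall that storage must serve, and whether the day's
    surplus solar can cover that energy after round-trip losses.
    """
    deficits = [max(load - gen, 0.0) for load, gen in zip(load_mw, solar_mw)]
    surpluses = [max(gen - load, 0.0) for load, gen in zip(load_mw, solar_mw)]
    power_mw = max(deficits)    # discharge rate needed (the watts side)
    energy_mwh = sum(deficits)  # 1-hour steps, so summed MW equals MWh
    feasible = sum(surpluses) * round_trip_eff >= energy_mwh
    return power_mw, energy_mwh, feasible


# Hypothetical day: flat 1,000 MW load, solar at 2,700 MW from 07:00 to
# 17:00 (10 hours) and zero during the remaining 14 hours.
load = [1000.0] * 24
solar = [2700.0 if 7 <= hour < 17 else 0.0 for hour in range(24)]
print(storage_requirements(load, solar))
```

In this example the storage needs only 1,000 MW of power capacity but 14,000 MWh of energy capacity, which is why the two measures are tracked separately.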

In 20 years, we might have enough advanced geothermal to provide dispatchable carbon-free electricity, plus enough large-scale storage and transmission to power entire states from surplus capacity elsewhere, even when the weather in a particular place is bad for solar and wind.

In 30 years, we might have fusion.

With that in mind, are you ready to sign an 80-year mortgage locking in today’s nuclear prices? The economics just don’t work out.

Wind and solar also have to be paired with either cheap natural gas or energy storage systems that are often monstrously expensive. Unfortunately, these numbers are almost always left out when people discuss prices.

People do appreciate the lights staying on, after all.

Yeah, and we haven't even gotten into reliability. They have dead times with no output at all, which nuclear doesn't suffer from.