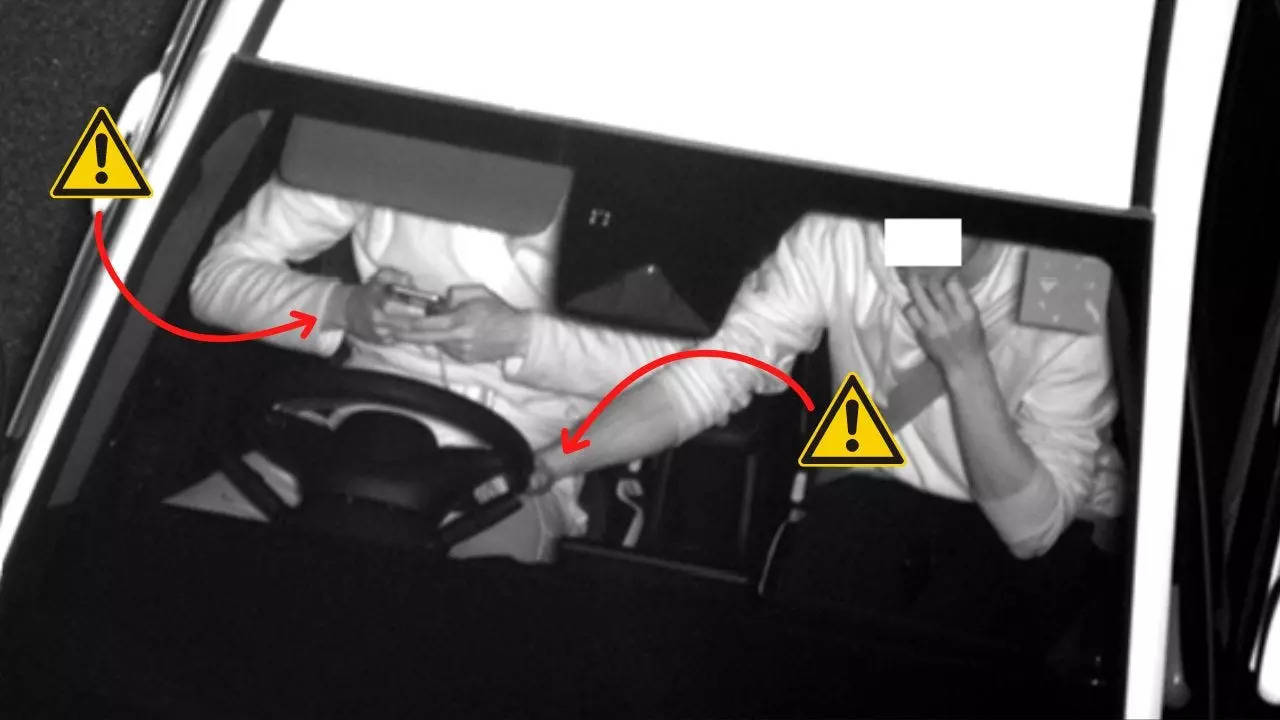

Police in England installed an AI camera system along a major road. It caught almost 300 drivers in its first 3 days.

An AI camera system installed along a major road in England caught 300 offenses in its first 3 days. There were 180 seat belt offenses and 117 mobile phone

Calling an image recognition system a robot enforcing the law is such a stretch you’re going to pull a muscle.

It’s going to disproportionately target minorities. ML* isn’t some wonderful impartial observer; it’s subject to all the same biases as the people who made it. Whether the people at the end of the process are impartial or not barely matters either, imo. They’re going to get the biased results of the ML looking for criminals, so it’s still going to be a flawed system even if the human element is OK. Ffs, please don’t support this kind of dystopian shit. Idk how it’s not completely obvious how horrifying this stuff is.

*What people call AI is not intelligent at all. It uses machine learning, the same process behind chatbots and autocorrect. “AI” is a buzzword used by tech bros who are desperate to “invest in the future.”

deleted by creator

Face recognition data sets and the like tend to be pretty heavily skewed; they usually have a lot more white people than POC. You can see this when ML image filters turn black people into white people or literal gorillas. Unless the data set properly represents a super diverse set of people (and tbh probably even if it does), there are going to be a lot of race-based false positives and false negatives.

deleted by creator

That might be the case tbh, but either way that would be bad and discriminatory. I might just be overthinking it, it might not actually be that bad, but I know discrimination like that is super common when it comes to how recognition-based ML is trained

But how is that different or worse from a human sitting at the side of the road and writing down number plates for example?

Tbh, part of my response to this is just a knee-jerk reaction. This specific application might not be a bad idea, but I’m terrified of the surveillance state this type of stuff is warming us up for. There’s already talk of cops in the US, China, and probably other places planning to use ML like this to pore over security footage and find criminals or track people in general. To me, this sounds like England’s first dip into that authoritarian pool: a proof of concept to see how viable it is to keep the entire country under 24/7 surveillance.

The image recognition system detects a cell phone being used and snaps a photo, records the plate number, etc. How exactly does that lead to racism?

You’re making what amounts to a slippery slope argument, and that’s often a very flawed way of thinking.